Scraping Open Table data with Selenium and Beautiful Soup

Lee Hawthorn September 16, 2020 #PythonIn this article we'll scrape data from Open Table for restaurants in Liverpool.

As Open Table uses JavaScript to produce pages we need to use Selenium.

I'm using Ubuntu 20.04 for this code.

Assuming you already have Python 3 installed.

Here are the other install steps:

- Download and Install the Firefox Geckodriver by running these commands in your terminal.

#!/usr/bin/env bash

# get latest - https://github.com/mozilla/geckodriver/releases

:///////.23.0/-.23.0-

- -*

+

////

- Install additional packages if you don't already have them.

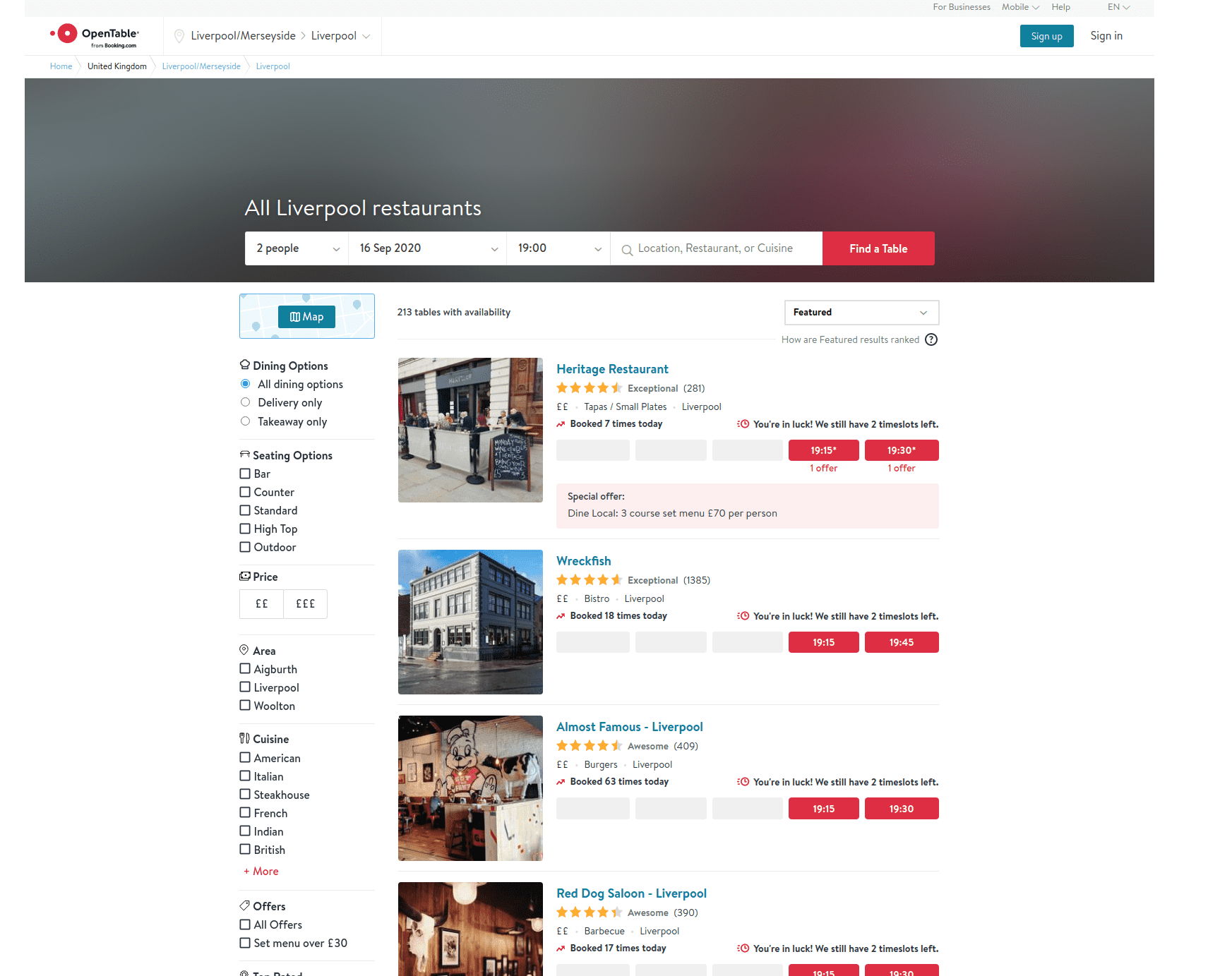

Open Table is a good source of restaurant info. We can see restaurant details like bookings, reviews, cuisine.

We need to browse to the 'listing' page for a specific area.

We can scrape data off each page using a combination of Selenium to navigate the site and Beautiful Soup to parse the page.

The page parser is a little complex as we need to use regex to extract booking number and review count from the spans. We also need to check each field, returning NA when no value is found.

Note, this code needs to be maintained as the site changes, hence, it may not work when you're reading this post.

"""Parse content from various tags from OpenTable restaurants listing"""

, = ,

=

= .

=

=

=

=

=

=

=

= .

= .

=

return

The only other task is to use Selenium to click through the pages, downloading each one. This isn't overly complex. We're just clicking the next page and counting the pages as we go. Note, we're adding the header by checking for the first page. I added a try/except as the last page doesn't have a Next button to click.

# store Liverpool restuarant results by iteratively appending to csv file from each page

=

=

= = 0

= True

=

break

+= 1

+=

=False

=

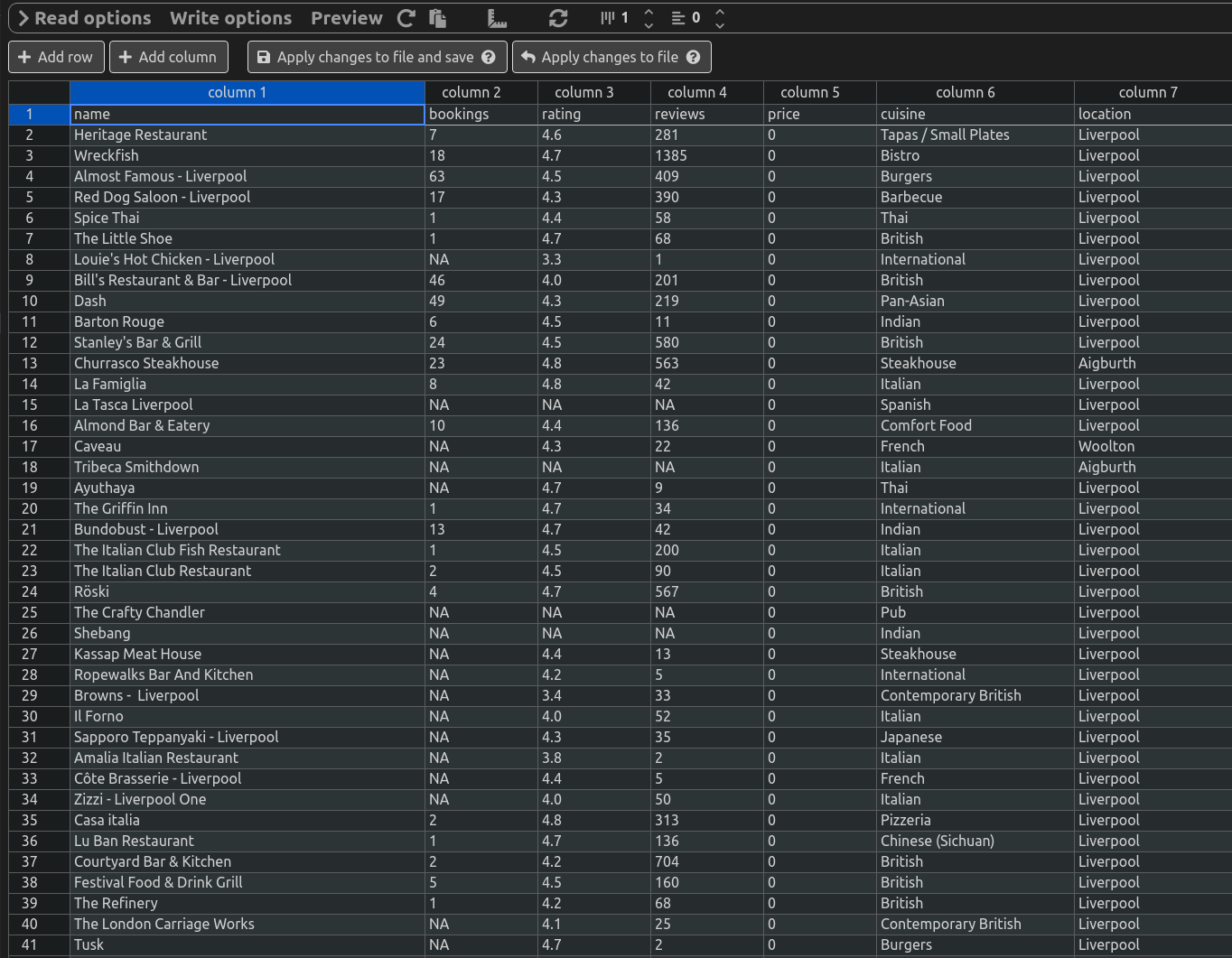

Here's some data from the CSV file.